Deep Learning-Based Prediction of CPU and Memory Consumption for Cost-Efficient Cloud Resource Allocation

Keywords:

Cloud computing, Deep learning, Gated Recurrent Units (GRU), Long Short-Term Memory (LSTM), Proactive resource allocation, Temporal Fusion Transformer (TFT)

Abstract

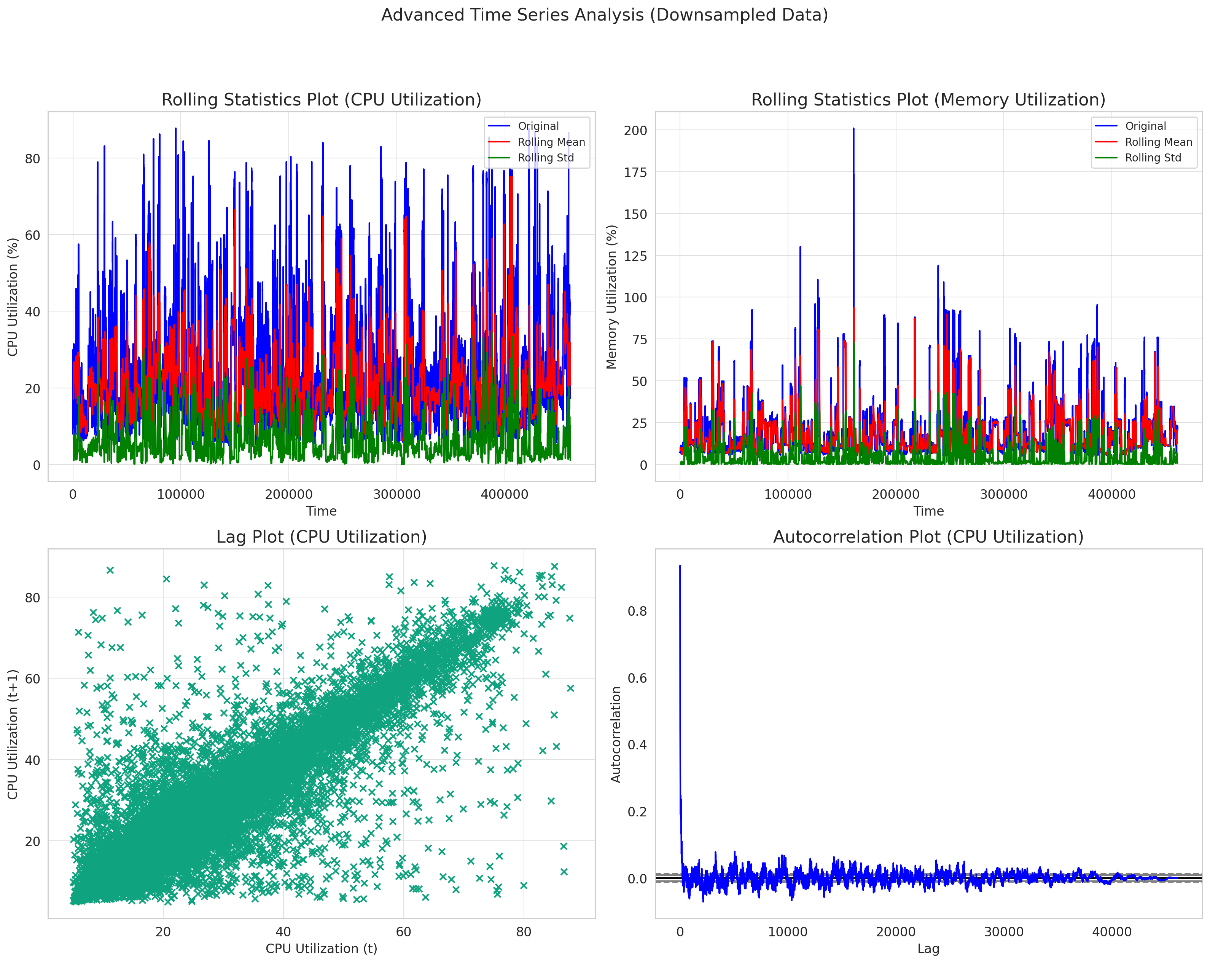

Cloud computing has become an integral part of modern IT infrastructure, offering scalable resources and services on demand. However, cloud usage carries significant costs, and efficient resource allocation is crucial for managing them. Traditional methods often rely on threshold-based rules or manual oversight for resource scaling, which can be inefficient and costly, and there is growing interest in using deep learning to predict resource usage and thereby optimize cost. This study presents a deep learning-based approach designed to predict future CPU and memory consumption in cloud environments so that resources can be allocated proactively. Two architectures were used, the Temporal Fusion Transformer (TFT) and Gated Recurrent Units (GRU), and both were trained, validated, and tested on the Google Cluster Workload Traces 2019 data. Their performance was compared against a traditional Exponential Smoothing (ETS) model. The GRU model achieved a Mean Absolute Error (MAE) of 6.45 and a Root Mean Square Error (RMSE) of 8.15, which was better than ETS but slightly worse than TFT. Once trained, a model can be deployed into the cloud infrastructure alongside an automated system that initiates auto-scaling based on its predictions. This proactive approach enables more efficient utilization of resources, with the potential for significant cost reduction. Incorporating a feedback loop for retraining or fine-tuning can also reduce the need for manual monitoring. This study offers an approach for enterprises and cloud service providers aiming for intelligent resource allocation and cost minimization.
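For readers unfamiliar with the two error metrics reported above, the following is a minimal sketch of how MAE and RMSE are conventionally computed from a forecast. The function names `mae` and `rmse` and the sample utilisation values are hypothetical and chosen only for illustration; they are not taken from the study's code or data.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error: the average of |actual - predicted|."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Square Error: square root of the mean squared difference."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


# Hypothetical CPU utilisation values (percent), for illustration only.
actual = [40.0, 50.0, 60.0, 70.0]
predicted = [42.0, 47.0, 60.0, 75.0]

print("MAE:", mae(actual, predicted))
print("RMSE:", rmse(actual, predicted))
```

Because RMSE squares each error before averaging, it penalises large misses more heavily than MAE and is never smaller than MAE on the same forecast, which is consistent with the GRU figures quoted in the abstract (8.15 versus 6.45).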